Real Time Transformations: Introduction

This guide gives you an overview of the general concept of Real Time Transformations that is introduced in the Replication Cockpit.

General Concept of Real Time Transformations

Real Time Transformations are a new concept that transforms only the records that have not been processed yet. Data that has already been transformed no longer needs to be dropped and recreated, which brings the following benefits:

Fast and effective transformations

Robust and error-free transformations

Predictable runtimes

You are probably familiar with Delta Extractions in Celonis. Real Time Transformations are the equivalent for transformations.

The existing transformation approach (referred to as Batch Transformations from now on) always calculates the resulting tables based on the full data that is available in Celonis, which is slow, unstable and volatile. In contrast, Real Time Transformations only consider the data that has changed since the last execution, which limits the calculation overhead.

Combining Data Jobs with the Replication Cockpit

The Replication Cockpit can handle the Delta Load functionalities for Extractions and Transformations. All the remaining capabilities still need to be configured via the Data Jobs.

| | Full Load | Delta Load |
|---|---|---|
| Extraction | Data Job | Replication Cockpit |
| Transformation | Data Job | Replication Cockpit |
| Data Model Load | Data Job | Data Job |

Batch Transformation vs Real Time Transformation

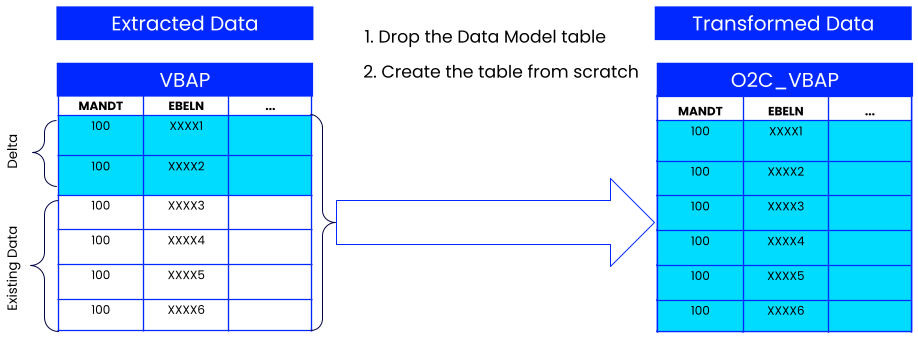

To better understand the difference, let's have a look at the graphics below:

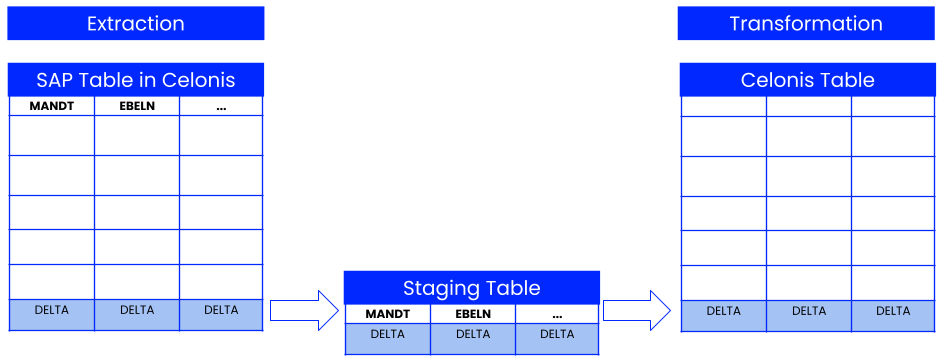

Extraction

The table on the left represents the table that is extracted from SAP (e.g. VBAP). With the Real-Time Extraction capabilities, we are able to perfectly identify the delta, meaning the subset of records that have been changed since the last execution. In our example the delta is represented by the last row in the table.

With every Extraction that is executed, only the identified delta is extracted. The respective records are then added to the table.

The table on the right represents the table that is created with a transformation statement in Celonis (e.g. the Activity Table or the Data Model table O2C_EKKO).

Batch Transformation

With the Batch Transformation approach, the table of the transformation is dropped and re-created from scratch for every execution.

All records are being processed with each execution.


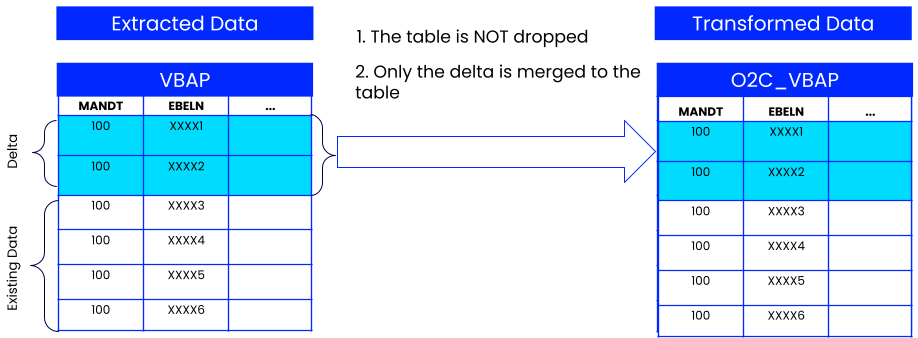

Real Time Transformation

With the Real Time Transformation approach, this table is no longer dropped. Instead, the new or changed entries (the delta) are inserted or merged into the table.

The blue part represents the records that are being processed as part of the Real Time Transformation.
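The difference between the two approaches can be illustrated with a minimal Python sketch. This is not Celonis code: the `transform` function, the record layout and the table contents are hypothetical and only serve to compare how many records each approach touches.

```python
# Hypothetical source table: key -> record. Names and values are illustrative only.
source = {
    "0001": {"VBELN": "0001", "NETWR": 100},
    "0002": {"VBELN": "0002", "NETWR": 250},
    "0003": {"VBELN": "0003", "NETWR": 75},
}


def transform(record):
    """Stand-in for a transformation statement (assumed logic)."""
    return {"CASE_KEY": record["VBELN"], "VALUE": record["NETWR"]}


def batch_transformation(source_table):
    """Drop the target and rebuild it from the full source table."""
    target = {}  # the old target is discarded
    for key, record in source_table.items():
        target[key] = transform(record)
    return target, len(source_table)  # every record is processed


def real_time_transformation(target, delta):
    """Keep the target and merge only the new or changed records."""
    for key, record in delta.items():
        target[key] = transform(record)  # insert or overwrite (merge)
    return target, len(delta)  # only the delta is processed


target, processed_batch = batch_transformation(source)

# A new extraction delivers one changed and one new record (the delta).
delta = {
    "0003": {"VBELN": "0003", "NETWR": 80},
    "0004": {"VBELN": "0004", "NETWR": 40},
}
source.update(delta)
target, processed_delta = real_time_transformation(target, delta)

print(processed_batch, processed_delta, len(target))
```

Both approaches end with the same target table, but the second run only touches the records in the delta.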


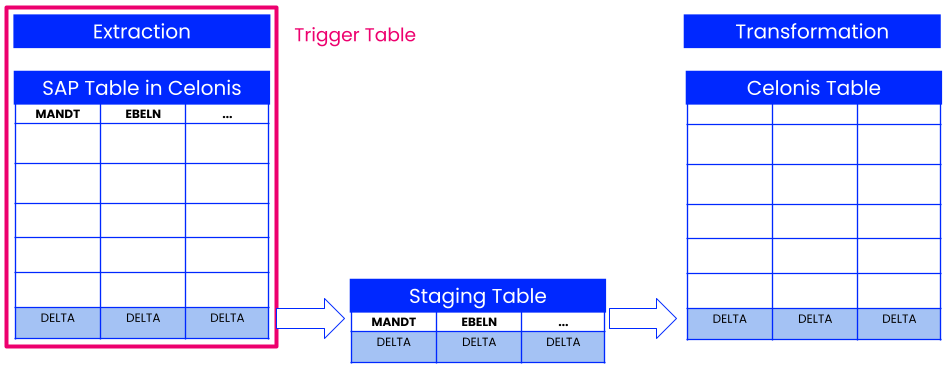

Introducing Trigger Tables

Real Time Transformations are executed each time new records are extracted to a specific table. This means that you need to map each transformation statement to a table whose extraction should trigger the transformation. This table is called a Trigger Table. In the Replication Cockpit, transformations are always defined at table-level.

Taking into account the graphic below, the SAP table on the left-hand side is the trigger table of a transformation. An update in this table triggers the execution of the transformation statement.


Note

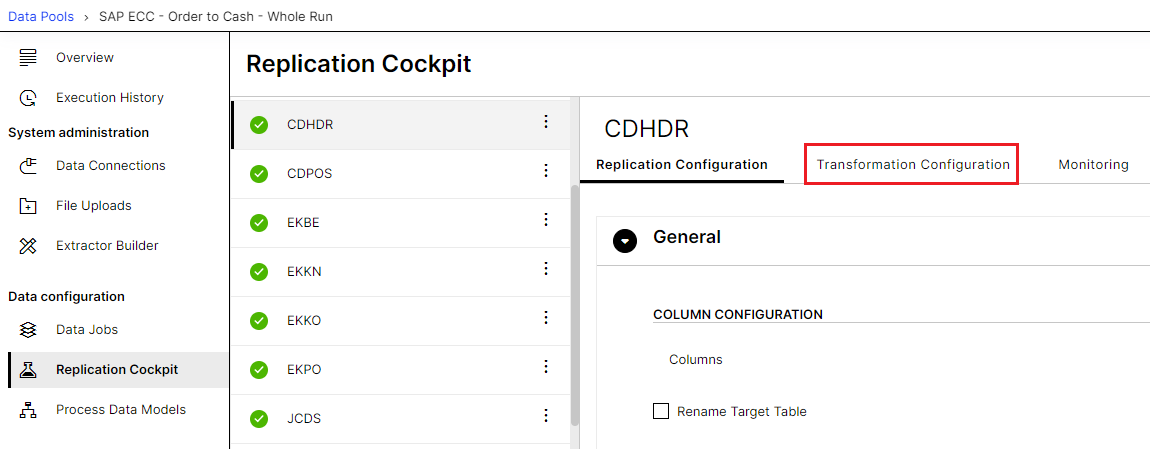

In the Replication Cockpit, transformations are always defined at table-level. A transformation can be defined in the 'Transformation Configuration' tab of a table.

Example:

A transformation statement needs to be triggered by the Table CDHDR. This means every change in CDHDR should lead to the execution of the transformation.

CDHDR is the trigger table of that transformation

=> The transformation is defined on the table CDHDR.


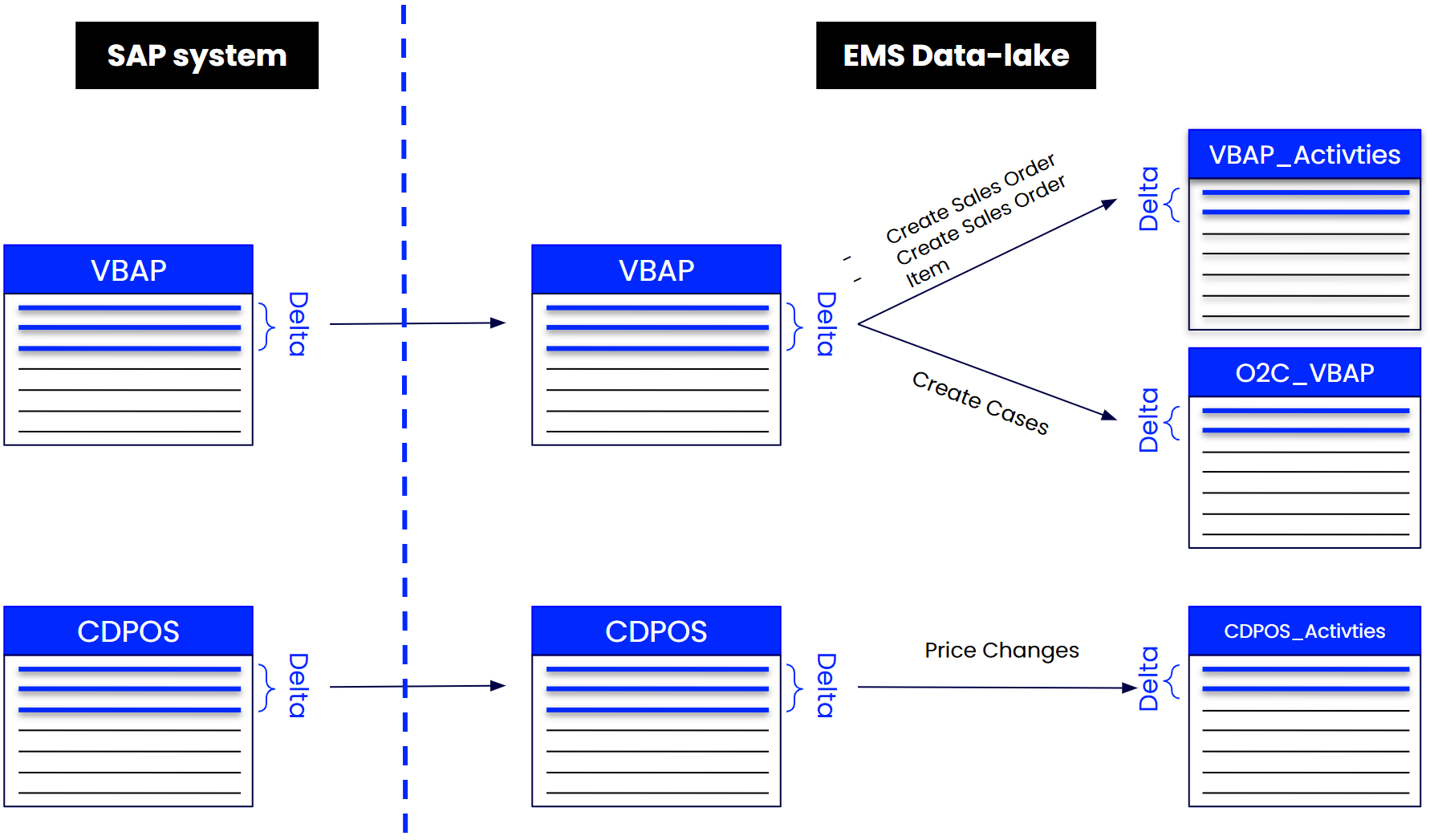

The following graphic shows an exemplary data flow for the source tables VBAP and CDPOS and how they trigger respective transformation statements.

In this example, VBAP triggers 2 activities and one Data Model table while CDPOS triggers 1 activity.
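The mapping between trigger tables and transformations in this example can be pictured as a simple lookup. The sketch below is a conceptual model in Python, not the Replication Cockpit's implementation, and the transformation names are hypothetical placeholders.

```python
# Trigger table -> transformations defined on it (names are hypothetical).
TRANSFORMATIONS_BY_TRIGGER_TABLE = {
    "VBAP": [
        "activity_create_sales_order_item",
        "activity_change_quantity",
        "data_model_table_vbap",
    ],
    "CDPOS": ["activity_change_price"],
}


def transformations_to_run(extracted_table):
    """Return the transformations triggered by an extraction of the given table."""
    return TRANSFORMATIONS_BY_TRIGGER_TABLE.get(extracted_table, [])


print(transformations_to_run("VBAP"))
print(transformations_to_run("KNA1"))  # no transformation is defined on KNA1 here
```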


Introducing Staging Tables

The necessity of Staging Tables is a logical consequence of the introduction of trigger tables.

Staging tables are intermediary tables that allow you to execute Real Time Transformations. They contain only the records that have been changed since the last execution. For every table that is extracted in the Replication Cockpit, a separate staging table exists. In your transformation, you select from the Staging Table instead of the full data table.


The staging tables are cleaned up after each successful transformation. Therefore, the overall flow looks as follows:

Extraction is started

Extraction is finished

Delta is inserted into Staging Table

Transformation is started

Transformation is finished

Staging Table is cleared
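The flow above can be modeled with a small Python class. This is a conceptual sketch only: the class and method names are hypothetical, and it assumes that a failed transformation leaves the staged records in place, which the text implies ("after each successful transformation") but does not spell out.

```python
class ReplicatedTable:
    """Conceptual model of one table in the Replication Cockpit (assumed design)."""

    def __init__(self, name):
        self.name = name
        self.data = {}     # full data table
        self.staging = {}  # records changed since the last successful transformation

    def extract(self, delta):
        """Extraction: add the delta to the data table and to the staging table."""
        self.data.update(delta)
        self.staging.update(delta)

    def run_transformation(self, transformation):
        """Run a transformation on the staged records; clear staging only on success."""
        try:
            result = transformation(self.staging)
        except Exception:
            return None  # staging is kept so the records can be processed later
        self.staging = {}
        return result


def failing(staged):
    raise RuntimeError("simulated failure")


def count_records(staged):
    return len(staged)


kna1 = ReplicatedTable("KNA1")
kna1.extract({"C1": {"NAME1": "Acme"}, "C2": {"NAME1": "Globex"}})

first = kna1.run_transformation(failing)
staged_after_failure = len(kna1.staging)
second = kna1.run_transformation(count_records)
staged_after_success = len(kna1.staging)
print(first, staged_after_failure, second, staged_after_success)
```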

Staging Tables have a specific syntax with which they can be accessed. In fact, for every table that is extracted via the Replication Cockpit, 2 different staging tables exist:

Transform Staging Table: contains only the records that have been created or updated after the last extraction (e.g. for KNA1 it is _CELONIS_TMP_KNA1_TRANSFORM_DATA). This table is deleted after each successful Replication execution.

Delete Staging Table: contains only the records that have been deleted from the table in the source system (e.g. for KNA1 it is KNA_DELETED_DATA). This table needs to be cleared manually after the data was used. Deletions are currently not part of the standard Real Time Transformation approach, but they can be added if needed.

Note

The staging table is only accessible in the transformations that are defined on the respective table.

Example:

A transformation is triggered by CDHDR and therefore defined on the table CDHDR.

In that transformation, you are able to reference the staging table of CDHDR (_CELONIS_TMP_CDHDR_TRANSFORM_DATA), but no other staging tables.

To bring a transformation into the Real Time transformation logic, the following three steps are necessary:

Identify the Trigger Table

Define the Transformation on this Trigger Table (→ in the Replication Cockpit transformations are always defined at table-level).

Select from the corresponding Staging Table within the transformation

These three steps are explained in much more detail in the two technical set-up guides about Real Time Transformations for Data Model Tables and for Activities.
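As a rough illustration of the third step, the sketch below runs a transformation that selects from a staging table rather than from the full table. It uses Python's built-in `sqlite3` module purely so that the example is self-contained; SQLite's `INSERT OR REPLACE` stands in for a merge, and the SQL available in Celonis may differ. The target table `O2C_KNA1`, its columns and the sample rows are hypothetical. Only the staging table name follows the pattern described above.

```python
import sqlite3

con = sqlite3.connect(":memory:")
cur = con.cursor()

# Full data table, its staging table (name pattern from the text), and a
# hypothetical target table produced by the transformation.
cur.execute("CREATE TABLE KNA1 (KUNNR TEXT PRIMARY KEY, NAME1 TEXT)")
cur.execute(
    "CREATE TABLE _CELONIS_TMP_KNA1_TRANSFORM_DATA (KUNNR TEXT PRIMARY KEY, NAME1 TEXT)"
)
cur.execute("CREATE TABLE O2C_KNA1 (KUNNR TEXT PRIMARY KEY, CUSTOMER_NAME TEXT)")

# State before the run: two customers have already been transformed.
cur.executemany("INSERT INTO KNA1 VALUES (?, ?)", [("1", "Acme"), ("2", "Globex")])
cur.executemany("INSERT INTO O2C_KNA1 VALUES (?, ?)", [("1", "ACME"), ("2", "GLOBEX")])

# New extraction: customer 2 was renamed, customer 3 is new.
delta = [("2", "Globex Corp"), ("3", "Initech")]
cur.executemany("INSERT OR REPLACE INTO KNA1 VALUES (?, ?)", delta)
cur.executemany("INSERT INTO _CELONIS_TMP_KNA1_TRANSFORM_DATA VALUES (?, ?)", delta)

# The transformation selects from the staging table, not from KNA1.
cur.execute(
    """
    INSERT OR REPLACE INTO O2C_KNA1 (KUNNR, CUSTOMER_NAME)
    SELECT KUNNR, UPPER(NAME1)
    FROM _CELONIS_TMP_KNA1_TRANSFORM_DATA
    """
)

processed = cur.execute(
    "SELECT COUNT(*) FROM _CELONIS_TMP_KNA1_TRANSFORM_DATA"
).fetchone()[0]
rows = cur.execute(
    "SELECT KUNNR, CUSTOMER_NAME FROM O2C_KNA1 ORDER BY KUNNR"
).fetchall()
print(processed, rows)
```

Only the two staged records are read by the transformation, while the untouched row for customer 1 stays in the target table.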

Introducing Dependencies

Warning

If there is a join condition on a table that is not defined as a “Dependency”, then the chronological consistency of the records among the tables is not guaranteed.

Another concept that is crucial for Real Time Transformations is the Dependency Logic that was introduced in the Replication Cockpit. It automatically ensures that only those records of the staging table are processed for which the corresponding updates in the dependent tables already exist.

The dependent tables of a transformation are all tables that are required to be up-to-date at the point when the transformation is executed. The trigger table itself cannot be a dependent table as it always has the most recent data when the transformation is started (the transformation follows consecutively after the extraction).

However, there can be many other tables that are referenced within the transformation statement, but whose extractions are running in their own separate cycles (in the Replication Cockpit). The dependencies that are defined at table-level address these issues and make sure that no important data is missed.

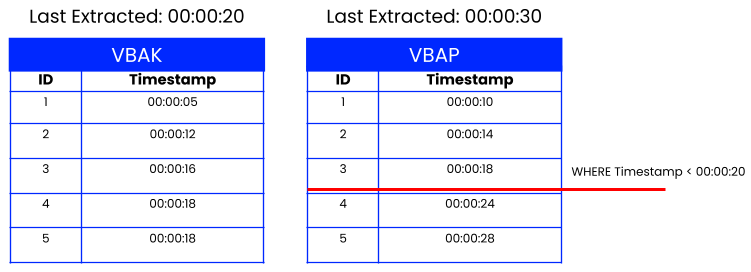

Example

A transformation is triggered by the table VBAP (Sales Document Item)

In the transformation, we also reference the table VBAK (Sales Document Header)

We need to make sure that VBAK and VBAP are "synchronized"

=> We define a dependency on VBAK for the table VBAP. The Replication Cockpit automatically handles this synchronization in the background.

How it works in more detail

Last Extraction date of VBAK: 00:00:20

Last Extraction date of VBAP: 00:00:30

=> Minimum last extraction date: 00:00:20

=> In the staging table, only records before the minimum extraction date (00:00:20) are processed

=> The later records (between 00:00:20 and 00:00:30) will be processed as soon as the dependent table (VBAK) has been extracted again (at 00:00:30 or later)
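The minimum-extraction-date rule from this example can be sketched in Python as follows. This is a conceptual model, not the Replication Cockpit's implementation: the function name, the record layout and the change times of the staged records are hypothetical, and treating a record exactly at the cutoff as "not yet ready" is an assumption based on the word "before".

```python
from datetime import time


def split_by_dependencies(staging_records, last_extraction_times):
    """Split staged records into those ready for processing and those that must wait.

    staging_records: list of (key, change_time) tuples (hypothetical layout).
    last_extraction_times: last extraction time of the trigger table and of
        each dependent table.
    """
    cutoff = min(last_extraction_times.values())
    ready = [r for r in staging_records if r[1] < cutoff]
    waiting = [r for r in staging_records if r[1] >= cutoff]
    return cutoff, ready, waiting


last_extraction_times = {"VBAK": time(0, 0, 20), "VBAP": time(0, 0, 30)}
staging = [("A", time(0, 0, 12)), ("B", time(0, 0, 18)), ("C", time(0, 0, 25))]

cutoff, ready, waiting = split_by_dependencies(staging, last_extraction_times)
print(cutoff, [key for key, _ in ready], [key for key, _ in waiting])

# VBAK is extracted again (hypothetical time), so the waiting record can be processed.
last_extraction_times["VBAK"] = time(0, 0, 35)
cutoff2, ready2, waiting2 = split_by_dependencies(waiting, last_extraction_times)
print(cutoff2, [key for key, _ in ready2], [key for key, _ in waiting2])
```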


Which tables to choose as dependent tables?

All tables that are inner joined (or referenced in a WHERE EXISTS clause) should be defined as dependent tables. Tables that are referenced with left/right outer joins can be added as well, but this is not required.
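This rule of thumb can be written down as a small helper. The sketch below is illustrative only: the function name and the way the join types are encoded as strings are assumptions, and the list of referenced tables is a made-up example.

```python
REQUIRED_JOIN_TYPES = {"inner join", "where exists"}
OPTIONAL_JOIN_TYPES = {"left outer join", "right outer join"}


def classify_dependencies(trigger_table, references):
    """Sort the tables referenced in a transformation into required and optional dependencies.

    references: list of (table, join_type) tuples (hypothetical encoding).
    """
    required, optional = [], []
    for table, join_type in references:
        if table == trigger_table:
            continue  # the trigger table itself cannot be a dependent table
        if join_type in REQUIRED_JOIN_TYPES:
            required.append(table)
        elif join_type in OPTIONAL_JOIN_TYPES:
            optional.append(table)
    return required, optional


required, optional = classify_dependencies(
    "VBAP",
    [("VBAP", "inner join"), ("VBAK", "inner join"), ("KNA1", "left outer join")],
)
print(required, optional)
```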

The definition of the dependencies is explained in much more detail in the two technical set-up guides about Real Time Transformations for Data Model Tables and for Activities.