Extraction task best practices

We recommend the following best practices when creating and configuring your extraction tasks:

Primary keys ensure data integrity by providing a unique identifier for every record. Where your data source requires it, define the primary key for each extraction job before extraction.

Note

In some extractors, such as SAP or Oracle, primary keys are taken directly from the source system. In others, such as Microsoft SQL Server (MSSQL), you may need to assign them manually.

Setting the primary key in advance plays a critical role in optimizing extraction behavior for different load types:

Full Loads: Primary keys allow the underlying database to create the appropriate indexes, which improves overall performance.

Delta Loads: Primary keys allow changed records to be matched with existing ones, which prevents duplicate records and data inconsistency.
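A minimal Python sketch of why keys matter for delta loads follows. It assumes that delta rows are merged into the existing table by primary key (replacing rows with the same key) and that, without a key, they can only be appended. The function `apply_delta` and the sample records are hypothetical and do not reproduce the Celonis implementation.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Row = Dict[str, object]


def apply_delta(
    existing: List[Row],
    delta: List[Row],
    primary_key: Optional[Sequence[str]] = None,
) -> List[Row]:
    """Hypothetical sketch: merge delta rows into an existing table.

    Assumption: with a primary key, a delta row replaces the existing row with
    the same key (or is added if the key is new); without one, rows are appended.
    """
    if not primary_key:
        # No key: nothing to match on, so changed records end up duplicated.
        return existing + delta

    def key_of(row: Row) -> Tuple[object, ...]:
        return tuple(row[col] for col in primary_key)

    merged: Dict[Tuple[object, ...], Row] = {key_of(r): r for r in existing}
    for row in delta:
        merged[key_of(row)] = row  # update an existing record or insert a new one
    return list(merged.values())


existing = [{"ID": 1, "STATUS": "open"}, {"ID": 2, "STATUS": "open"}]
delta = [{"ID": 2, "STATUS": "closed"}, {"ID": 3, "STATUS": "open"}]

print(len(apply_delta(existing, delta)))          # 4: ID 2 appears twice
print(len(apply_delta(existing, delta, ["ID"])))  # 3: ID 2 updated in place
```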

To assign a primary key in an extractor, click Configure and then mark the required columns as Primary.

Important

When assigning primary keys, always make sure that the selected column or combination of columns uniquely identifies each record, so that no two records share the same key value.

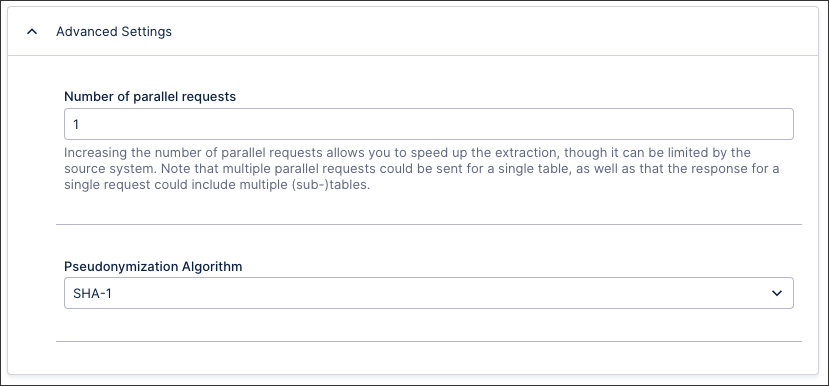
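Before marking columns as Primary, it can help to verify on a sample of source data that the chosen combination really is unique. The helper `duplicate_keys` below is a hypothetical sketch, not an extractor feature; it lists every key value that occurs more than once. The sample rows use SAP-style column names purely for illustration.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple


def duplicate_keys(
    rows: List[Dict[str, object]], key_columns: Sequence[str]
) -> List[Tuple[object, ...]]:
    """Hypothetical helper: return every key value that occurs more than once."""
    counts = Counter(tuple(row[col] for col in key_columns) for row in rows)
    return [key for key, n in counts.items() if n > 1]


rows = [  # illustrative sample data
    {"MANDT": "100", "VBELN": "0001", "POSNR": "10"},
    {"MANDT": "100", "VBELN": "0001", "POSNR": "20"},
    {"MANDT": "100", "VBELN": "0002", "POSNR": "10"},
]

print(duplicate_keys(rows, ["MANDT", "VBELN"]))           # [('100', '0001')]
print(duplicate_keys(rows, ["MANDT", "VBELN", "POSNR"]))  # []
```

An empty result for a candidate combination only shows uniqueness in the sample checked, not in all future data.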

You can enable parallel table extraction in the advanced settings of your data connection, either for an existing connection or when adding a new cloud connection. You can extract a maximum of ten tables in parallel. Increasing the number of parallel requests can speed up the extraction, although the source system may limit the gain. Note that multiple parallel requests can be sent for a single table, and that the response to a single request can include multiple (sub-)tables.
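The following Python sketch models the parallelism setting as an upper bound on concurrently running table extractions, using a thread pool capped at the documented maximum of ten. `extract_table` is a hypothetical placeholder for one extraction request, and clamping the requested value into the range 1 to 10 is an assumption made for illustration.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

MAX_PARALLEL_TABLES = 10  # maximum number of tables extracted in parallel


def parallel_workers(requested: int) -> int:
    """Assumption: clamp the requested parallelism into the range 1..10."""
    return max(1, min(requested, MAX_PARALLEL_TABLES))


def extract_all(tables: List[str], requested: int) -> Tuple[List[str], int]:
    """Extract tables concurrently; also report the peak number running at once."""
    lock = threading.Lock()
    state: Dict[str, int] = {"running": 0, "peak": 0}

    def extract_table(name: str) -> str:
        # Hypothetical stand-in for one table extraction request.
        with lock:
            state["running"] += 1
            state["peak"] = max(state["peak"], state["running"])
        time.sleep(0.01)  # simulate waiting on the source system
        with lock:
            state["running"] -= 1
        return name

    with ThreadPoolExecutor(max_workers=parallel_workers(requested)) as pool:
        done = list(pool.map(extract_table, tables))
    return done, state["peak"]


done, peak = extract_all([f"TABLE_{i}" for i in range(25)], requested=50)
print(len(done), peak <= MAX_PARALLEL_TABLES)  # 25 True
```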


Parallel SAP table extractions

For SAP extractions, the number of parallel extractions is checked per hostname. Even if you define multiple connections or multiple extraction jobs, the number of parallel table extractions per hostname is a global limit on parallelism. Running more jobs at the same time does not raise this limit; it only places additional table extractions in a queue. To achieve higher throughput, gradually increase the number of parallel extractions, up to a maximum of 30. Always monitor extraction times, CPU load, and memory usage on both the source system and the extractor server: higher parallelism increases CPU load and memory usage on both systems, and overloading either one makes the extraction less efficient.
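To illustrate why extra jobs only create a queue, the sketch below assumes one shared semaphore per SAP hostname: every connection and job that targets the same host draws from the same pool of slots. `HostLimiter` and the hostname are hypothetical, and the real scheduler may work differently.

```python
import threading
import time
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

SAP_MAX_PARALLEL = 30  # documented upper bound for SAP parallel table extractions


class HostLimiter:
    """Hypothetical model of a per-hostname limit shared by all connections and jobs."""

    def __init__(self, per_host_limit: int) -> None:
        self.limit = max(1, min(per_host_limit, SAP_MAX_PARALLEL))
        self._lock = threading.Lock()
        self._slots: Dict[str, threading.Semaphore] = {}
        self._running: Dict[str, int] = defaultdict(int)
        self.peak: Dict[str, int] = defaultdict(int)

    def _slots_for(self, host: str) -> threading.Semaphore:
        # One semaphore per hostname, no matter which connection or job asks.
        with self._lock:
            if host not in self._slots:
                self._slots[host] = threading.Semaphore(self.limit)
            return self._slots[host]

    def extract(self, host: str, table: str) -> str:
        with self._slots_for(host):  # waits in a queue while all slots are busy
            with self._lock:
                self._running[host] += 1
                self.peak[host] = max(self.peak[host], self._running[host])
            time.sleep(0.01)  # placeholder for the actual table extraction
            with self._lock:
                self._running[host] -= 1
        return table


HOST = "sap-prd.example.com"  # hypothetical hostname
limiter = HostLimiter(per_host_limit=4)
# Three jobs with eight tables each target the same hostname at the same time.
work: List[Tuple[str, str]] = [(HOST, f"JOB{j}_TABLE{t}") for j in range(3) for t in range(8)]
with ThreadPoolExecutor(max_workers=len(work)) as pool:
    finished = list(pool.map(lambda item: limiter.extract(*item), work))

print(len(finished), limiter.peak[HOST] <= limiter.limit)  # 24 True
```

All 24 table extractions complete, but never more than four run against the host at once; the remaining ones wait.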

Parallel JDBC table extractions

For JDBC extractions, the number of parallel extractions is checked per job. Running multiple jobs at the same time can therefore result in many times the maximum number of parallel table extractions defined in the JDBC connection, which makes load planning more difficult. We therefore recommend scheduling JDBC extractions so that they do not overlap on the same source system and extractor server. Instead, gradually increase the number of parallel table extractions while making sure that CPU and memory on the source system and the extractor server are not overloaded.
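Because the JDBC limit applies per job, overlapping jobs add up. This Python sketch estimates the worst-case number of concurrent table extractions for a schedule, assuming each job uses its full per-job limit for its entire run. `JdbcJob` and `peak_parallel_tables` are hypothetical names, and times are plain minutes for simplicity.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class JdbcJob:
    name: str
    start: int            # start time in minutes (hypothetical unit)
    end: int              # end time in minutes, exclusive
    parallel_tables: int  # per-job limit from the JDBC connection


def peak_parallel_tables(jobs: List[JdbcJob]) -> int:
    """Worst-case table extractions running at once across all jobs (sweep line)."""
    events: List[Tuple[int, int]] = []
    for job in jobs:
        events.append((job.start, job.parallel_tables))
        events.append((job.end, -job.parallel_tables))
    # At equal timestamps, sort job ends (negative deltas) before job starts.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


overlapping = [JdbcJob("orders", 0, 60, 8), JdbcJob("invoices", 30, 90, 8)]
staggered = [JdbcJob("orders", 0, 60, 8), JdbcJob("invoices", 60, 120, 8)]
print(peak_parallel_tables(overlapping))  # 16
print(peak_parallel_tables(staggered))    # 8
```

Staggering the two jobs keeps the peak at the per-job limit instead of doubling it.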

When creating joins in your extraction, you work with child tables and parent tables:

Child table: The table to be extracted.

Parent table: A joined table used to filter the child table.

The join is executed in the following order:

First, the extraction applies the filters defined on the parent table, as shown on the right-hand side.

Next, the parent table is joined to the child table. This join is implemented in Java and behaves largely like an inner join in SQL.

Finally, the additional filters are applied to the table that results from the join.
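The three steps above can be sketched in Python with in-memory rows. This is an illustrative model only, assuming a single join column and returning only the child table's columns; the function name and the SAP-style sample data are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Row = Dict[str, object]
Predicate = Callable[[Row], bool]


def filtered_join_extract(
    child: List[Row],
    parent: List[Row],
    join_column: str,
    parent_filter: Predicate,
    additional_filter: Predicate,
) -> List[Row]:
    """Hypothetical sketch: filter parent, inner join, then filter the result."""
    # Step 1: apply the parent table's own filters.
    parents = [p for p in parent if parent_filter(p)]

    # Step 2: inner join; child rows without a matching parent are dropped.
    by_key: Dict[object, List[Row]] = {}
    for p in parents:
        by_key.setdefault(p[join_column], []).append(p)
    joined: List[Tuple[Row, Row]] = [
        (c, p) for c in child for p in by_key.get(c[join_column], [])
    ]

    # Step 3: additional filters see parent and child columns together;
    # only the child (to-be-extracted) columns are returned.
    return [c for c, p in joined if additional_filter({**p, **c})]


items = [  # child table: the table to be extracted
    {"VBELN": "1", "POSNR": "10", "NETWR": 50},
    {"VBELN": "1", "POSNR": "20", "NETWR": 200},
    {"VBELN": "2", "POSNR": "10", "NETWR": 300},
    {"VBELN": "3", "POSNR": "10", "NETWR": 400},
]
headers = [{"VBELN": "1", "AUART": "TA"}, {"VBELN": "2", "AUART": "RE"}]  # parent table

result = filtered_join_extract(
    items,
    headers,
    "VBELN",
    parent_filter=lambda p: p["AUART"] == "TA",
    additional_filter=lambda row: row["NETWR"] > 100,
)
print(result)  # [{'VBELN': '1', 'POSNR': '20', 'NETWR': 200}]
```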

Keep the following in mind:

"Use primary key" refers to the primary key of the parent table.

If the table being extracted is on the 1 side of a 1:n relationship and you join it on the primary key to a table on the n side, the extracted table will contain duplicates: each extracted record appears once for every matching record in the joined table.

If there are several parent tables, each parent table is filtered first, then the parents are joined from top to bottom, and the resulting table is joined to the child table.
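The following sketch shows how these duplicates arise under inner-join semantics when the extracted table is on the 1 side: each header row is kept once per matching item row. The helper name and sample data are hypothetical.

```python
from collections import Counter
from typing import Dict, List

Row = Dict[str, object]


def inner_join_keep_left(left: List[Row], right: List[Row], column: str) -> List[Row]:
    """Hypothetical helper: keep each left row once per matching right row."""
    return [row for row in left for match in right if row[column] == match[column]]


# The extracted table is the 1 side (order headers) ...
headers = [{"VBELN": "1"}, {"VBELN": "2"}]
# ... and the joined filter table is the n side (order items).
items = [
    {"VBELN": "1", "POSNR": "10"},
    {"VBELN": "1", "POSNR": "20"},
    {"VBELN": "1", "POSNR": "30"},
    {"VBELN": "2", "POSNR": "10"},
]

extracted = inner_join_keep_left(headers, items, "VBELN")
print(Counter(row["VBELN"] for row in extracted))  # Counter({'1': 3, '2': 1})
```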

SAP ECC contains both so-called "transparent" and "cluster" tables. Extracting cluster tables takes significantly longer than extracting transparent tables, so exclude or replace cluster tables wherever possible.

For more information, see: Replacing SAP cluster tables (BSEG)

Delta filters define which entries of a table are loaded during a delta load. As a best practice, use dynamic parameters with the operation type FIND_MAX for delta filters, because they return the maximum value of a defined column. If the specified table does not exist in the Celonis Platform or is empty, the DEFINED VALUE is used instead. If no DEFINED VALUE is set, the DEFAULT VALUE is used.
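The fallback order for a FIND_MAX parameter can be sketched as follows. `resolve_find_max` is a hypothetical function, not a Celonis API; it models a missing table as `None` and an empty table as an empty list, and the column name and dates are sample values.

```python
from datetime import date
from typing import Dict, List, Optional

Row = Dict[str, object]


def resolve_find_max(
    table: Optional[List[Row]],
    column: str,
    defined_value: Optional[object],
    default_value: object,
) -> object:
    """Hypothetical sketch of the FIND_MAX fallback order.

    `table` is None when the table does not exist in the Celonis Platform.
    """
    if table:  # the table exists and is not empty
        return max(row[column] for row in table)
    if defined_value is not None:
        return defined_value
    return default_value


loaded = [{"AEDAT": date(2024, 3, 1)}, {"AEDAT": date(2024, 3, 5)}]
print(resolve_find_max(loaded, "AEDAT", date(2024, 1, 1), date(2000, 1, 1)))  # 2024-03-05
print(resolve_find_max([], "AEDAT", date(2024, 1, 1), date(2000, 1, 1)))      # 2024-01-01
print(resolve_find_max(None, "AEDAT", None, date(2000, 1, 1)))                # 2000-01-01
```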

During a full extraction, Celonis checks the actual length of the contents of every VARCHAR column that is at least 64 characters wide and resizes the column as needed to fit its contents. This can significantly improve performance, because oversized columns can slow down transformations and data loads. During delta extractions, columns are automatically enlarged if necessary.
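A rough Python model of these resizing rules follows. It assumes widths are measured in UTF-8 bytes (as with the platform's 65,000-byte column limit) and that a column is never shrunk below one byte; both assumptions, and the function names, are illustrative and not confirmed Celonis behavior.

```python
from typing import Iterable


def byte_length(value: str) -> int:
    # Assumption: widths are measured in UTF-8 bytes.
    return len(value.encode("utf-8"))


def width_after_full_load(declared: int, values: Iterable[str]) -> int:
    """Hypothetical sketch: shrink VARCHAR columns of width >= 64 to fit the data."""
    if declared < 64:
        return declared  # narrower columns are left unchanged
    longest = max((byte_length(v) for v in values), default=0)
    return max(longest, 1)  # assumption: never shrink below VARCHAR(1)


def width_after_delta_load(current: int, values: Iterable[str]) -> int:
    """Hypothetical sketch: delta loads only enlarge a column when data is longer."""
    longest = max((byte_length(v) for v in values), default=0)
    return max(current, longest)


print(width_after_full_load(255, ["Hamburg", "München"]))  # 8
print(width_after_full_load(40, ["Hamburg"]))              # 40
print(width_after_delta_load(8, ["Frankfurt am Main"]))    # 17
```

"München" needs 8 bytes because "ü" takes two bytes in UTF-8.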

Because of this resizing, column widths can change depending on the content. If your transformation code relies on the original column widths, you can restore them at the beginning of your transformation phase, for example:

ALTER TABLE table_name ALTER COLUMN column_name SET DATA TYPE VARCHAR(original_column_size);

When creating new tables during the transformation phase, let the column lengths be derived dynamically from the source columns:

CREATE TABLE new_table AS SELECT column1, column2 FROM table2;

Avoid static column lengths, such as:

CREATE TABLE new_table (column1 VARCHAR(original_column_size), column2 VARCHAR(original_column_size));
INSERT INTO new_table (column1, column2) SELECT column1, column2 FROM table2;

The Celonis Platform limits each column value to a maximum of 65,000 bytes. The number of characters this corresponds to varies depending on the specific characters and the encoding used.

If a column in your source system contains values longer than 65,000 bytes, the data is automatically truncated and the extraction still finishes successfully.
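Because the limit is counted in bytes, the number of characters that survive truncation depends on the encoding. This sketch assumes UTF-8 and truncation at a character boundary; `truncate_to_bytes` is hypothetical and the platform's exact truncation rule may differ.

```python
MAX_COLUMN_BYTES = 65_000  # column limit stated in this section


def truncate_to_bytes(value: str, limit: int = MAX_COLUMN_BYTES) -> str:
    """Hypothetical sketch: cut a string to at most `limit` UTF-8 bytes.

    Assumption: UTF-8 encoding, and a multi-byte character is never split.
    """
    encoded = value.encode("utf-8")
    if len(encoded) <= limit:
        return value
    # errors="ignore" drops a trailing, incomplete multi-byte sequence.
    return encoded[:limit].decode("utf-8", errors="ignore")


for sample in ["a" * 70_000, "ä" * 40_000, "€" * 30_000]:
    cut = truncate_to_bytes(sample)
    print(len(cut), len(cut.encode("utf-8")))
# 65000 65000
# 32500 65000
# 21666 64998
```

One-byte ASCII characters keep 65,000 characters, two-byte "ä" keeps 32,500, and three-byte "€" keeps 21,666.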