Data preparation troubleshooting

Imported Data Connections: Missing data

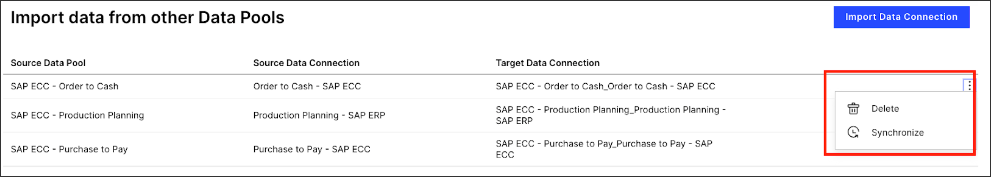

It could be that there are missing tables or columns in the source tables that are referenced in the global jobs. If that is the case, you should extract and or/transform these tables/columns inside of the respective data pools and their jobs. Once you create new tables or new columns in those source pools, you will need to resynchronize the data connection inside the imported data connections section of the IM pool before it becomes available.

|

Missing Data Pools / Data Pools are not Implemented

It could be that your Celonis Platform team has not implemented O2C, PP and/or P2P, hence their data connections cannot be exported. If that is the case there are two options to be considered.

Option 1 (recommended): Install the different data pools and run the necessary extractions and jobs for the tables required by the IM Connector. You can see the list of required transformed tables in the respective P2P, O2C and PP global jobs. For the eventlogs, the most important activities that should be validated are those surrounding the goods receipts/issues of materials, the creation dates of the documents, and the start and finish dates of production.

Option 2: Install the necessary data pools and copy the necessary extractions and transformations into the IM data pool. This is not recommended as it makes future project implementations more troublesome.

Transformation Templates across Multiple Source Systems

If you are using multiple source systems in the inventory management jobs, it could be that some systems require different logic to other systems, or that some systems do not have the same fields as others. This is completely fine, and there is a lot of flexibility in the event collection process to support this. Below are a few scenarios that might come up.

Scenario 1: Different Logic for the IM_Activities Consumptions

It could be that one source system uses different parameters to another source system when considering which movement types are considered as consumptions or replenishments. If this is the case, the recommendation is to:

Create a new transformation (non-template) above the IM_Activties transformation, and inside this transformation create a temporary table with the respective consumption logic for the system.

Update the IM_Activities table such that the temporary table above is also used in the consumption logic. For example by adding a left join to the temporary table and referencing the temporary table in the consumption_qty field for the IM_Activities table creation.

Save this transformation. As it is a template transformation, it will automatically be applied to all other data jobs that make use of it.

Ensure you add the custom logic inside of non-template transformations across the other data jobs in the other source systems.

This is just an example, but very similar cases occur all of the time. It is important to think about smart ways to avoid removing the templates, as those templates make maintenance of the overall transformation very easy. You can then maintain the source system specific logic in one non-template transformation without accidentally editing the template transformation for the IM_Activities (or any other table).

Scenario 2: Tables are missing in one source system compared to another

It is also very possible that one source system doesn’t contain the same tables as the other (think about cases where one source system is for Procurement and Production, and the other source system for Sales and Distribution). This is completely fine, and can be resolved easily by:

First, in the source system specific data job, simply disable that transformation.

Then in the global jobs, remove (or do not add in the first place) the union all to that source system.

Scenario 3: Columns are missing in one source system compared to another

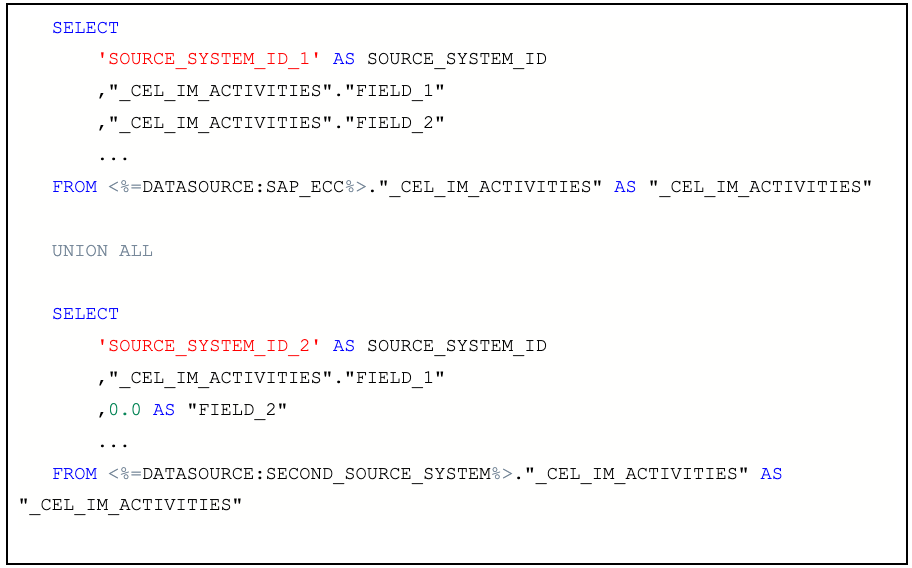

The third scenario is that one table has more fields than the other, for example additional attributes specific to the business use cases of that source system. If this is the case, there are two possible approaches.

First approach:

If the field is just an additional attribute and not used in another calculations/logic processing, you can use the SELECT * to ensure all possible columns of that table are available in the local jobs of the difference systems.

Then in the global jobs, rather than using SELECT * FROM …, you would instead select the required columns for that table from where they all exist, and in case they do not replace the table.field with NULL (for string) or 0.0 (for floats / ints). The NULL vs String is important in PQL later: if you are summing up that field and a NULL is present, then this can return strange errors.

Second approach:

If the fields are required for logical processing and calculations, then this is a case where it makes sense to ‘Fork’ the template transformation. This would be done by copying the transformation to a regular task and then adding the specific logic required for those fields. It would also be possible to then make this new transformation a template as well, such that it could be used in other source systems where the same logic is required, while the old template is still used in the other source systems.