Troubleshooting

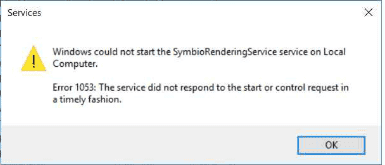

If the rendering service cannot be started, you may see the following error 1053 in Services message:

Solution

Go to Start > Run and then enter regedit.

Navigate to **HKEY_LOCAL_MACHINE**.

Right-click the Control key and select “New” > “DWORD Value”.

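Name the new DWORD:

ServicesPipeTimeout

Right-click ServicesPipeTimeout, and then click “Modify”.

Click Decimal, enter “180000”, and then click OK. The value is in milliseconds, so 180000 sets a timeout of 180 seconds.

Restart the computer so that the new timeout takes effect.

Go to Start > Run and enter services.msc to open the Services control panel.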

Select the Celonis Process Management Rendering Service and start it.

If the service is running, switch back to verification and export the process manual.
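If you prefer to script the registry change instead of using regedit, the following is a minimal Python sketch using the standard-library `winreg` module. It assumes the value belongs under `HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control`, the key where Windows reads ServicesPipeTimeout; verify this against the key you navigated to above. It must run on Windows from an elevated (administrator) prompt, and the restart is still required.

```python
import sys

# Assumption: ServicesPipeTimeout lives under this key (the "Control" key above).
CONTROL_KEY = r"SYSTEM\CurrentControlSet\Control"
VALUE_NAME = "ServicesPipeTimeout"
TIMEOUT_MS = 180_000  # 180000 ms = 180 s, the value entered in the Decimal field


def validate_timeout(timeout_ms: int) -> int:
    """Return timeout_ms if it fits in a REG_DWORD (unsigned 32-bit integer)."""
    if not 0 <= timeout_ms <= 0xFFFFFFFF:
        raise ValueError("ServicesPipeTimeout must fit in a 32-bit DWORD")
    return timeout_ms


def set_services_pipe_timeout(timeout_ms: int = TIMEOUT_MS) -> None:
    """Create or overwrite the ServicesPipeTimeout DWORD (Windows only, needs admin)."""
    import winreg  # Windows-only standard-library module, imported lazily

    value = validate_timeout(timeout_ms)
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, CONTROL_KEY, 0,
                        winreg.KEY_SET_VALUE) as key:
        winreg.SetValueEx(key, VALUE_NAME, 0, winreg.REG_DWORD, value)


if __name__ == "__main__" and sys.platform == "win32":
    set_services_pipe_timeout()
    print(f"{VALUE_NAME} set to {TIMEOUT_MS} ms; restart Windows to apply it.")
```

`winreg` is imported inside the function so the module can still be loaded on other platforms; the write itself only happens on Windows.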

If the rendering service still cannot be started after this registry fix, check the Event Viewer for a FileNotFound exception.

Solution

Install the missing Microsoft Visual C++ Redistributable from here.

Then start the Celonis Process Management Rendering Service: go to Start > Run, enter services.msc, select the service, and start it.
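Starting the service and confirming that it reaches the RUNNING state can also be scripted. The Python sketch below wraps the Windows `sc.exe start` and `sc.exe query` commands. `SERVICE_NAME` is a hypothetical placeholder: `sc.exe` expects the internal service name shown on the General tab of the service's Properties dialog in services.msc, which may differ from the display name. Run it from an elevated prompt.

```python
import subprocess
import sys
import time

# Hypothetical placeholder: replace with the "Service name" from the service's
# Properties dialog in services.msc (it can differ from the display name
# "Celonis Process Management Rendering Service").
SERVICE_NAME = "YourRenderingServiceName"


def sc_command(action: str, service: str = SERVICE_NAME) -> list[str]:
    """Build an sc.exe command line for the supported actions."""
    if action not in ("start", "query"):
        raise ValueError(f"unsupported action: {action}")
    return ["sc.exe", action, service]


def is_running(query_output: str) -> bool:
    """Return True if `sc query` output contains a STATE line reporting RUNNING."""
    for line in query_output.splitlines():
        if line.strip().startswith("STATE") and "RUNNING" in line:
            return True
    return False


def start_and_wait(attempts: int = 10, delay_s: float = 2.0) -> bool:
    """Start the service, then poll until it reports RUNNING (Windows only)."""
    subprocess.run(sc_command("start"), check=False)  # requires an elevated prompt
    for _ in range(attempts):
        result = subprocess.run(sc_command("query"), capture_output=True, text=True)
        if is_running(result.stdout):
            return True
        time.sleep(delay_s)  # the service may report START_PENDING for a while
    return False


if __name__ == "__main__" and sys.platform == "win32":
    if start_and_wait():
        print("Service is running.")
    else:
        print("Service did not start; check Event Viewer for a FileNotFound exception.")
```

Polling is used because a service typically reports START_PENDING for a short time before it is RUNNING.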